Context Engineering for AI Agents

Lessons from building Manus, an AI agent framework that leverages the in-context learning of frontier models

"Context engineering is still an emerging science—but for agent systems, it's already essential."

The Choice: End-to-End vs In-Context Learning

At the beginning of the Manus project, we faced a key decision:

- Train an end-to-end agentic model using open-source foundations

- Build an agent on top of the in-context learning abilities of frontier models

Our choice: Bet on context engineering

Why? We can ship improvements in hours instead of weeks, and our product stays orthogonal to the underlying models

We call our manual process of architecture searching, prompt fiddling, and empirical guesswork "Stochastic Graduate Descent" (a play on stochastic gradient descent)

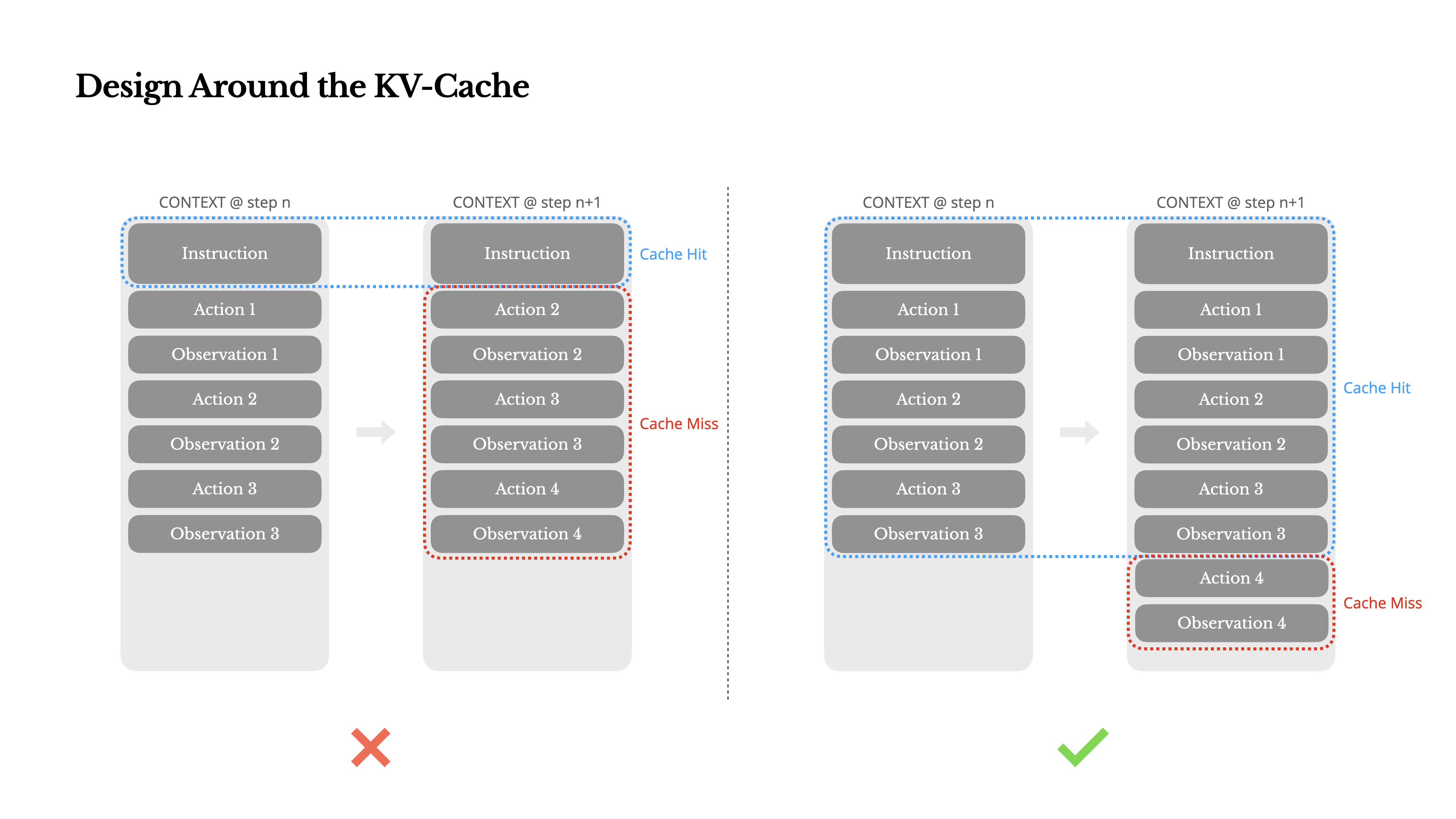

Design Around the KV-Cache

The single most important metric for a production-stage AI agent: KV-cache hit rate

Why it matters:

- Directly affects latency and cost

- Context grows with every step, while the output (usually a structured function call) stays short

- In Manus, the average input-to-output token ratio is around 100:1

With Claude Sonnet, for example, cached input tokens cost 0.30 USD/MTok versus 3 USD/MTok uncached, a 10x difference
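A quick worked example of what the hit rate is worth, using the two input prices above. The helper `input_cost_usd` and the 100,000-token step are hypothetical, and output tokens are ignored because the comparison concerns input pricing only.

```python
# Input prices quoted above, in USD per million tokens (MTok).
CACHED_USD_PER_MTOK = 0.30
UNCACHED_USD_PER_MTOK = 3.00


def input_cost_usd(input_tokens: int, cache_hit_rate: float) -> float:
    """Input-side cost of one call, given the fraction of input tokens served from cache."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    cached_tokens = input_tokens * cache_hit_rate
    uncached_tokens = input_tokens - cached_tokens
    return (cached_tokens * CACHED_USD_PER_MTOK + uncached_tokens * UNCACHED_USD_PER_MTOK) / 1_000_000


# Hypothetical step: 100,000 input tokens, i.e. roughly 1,000 output tokens at a 100:1 ratio.
for rate in (0.0, 0.5, 0.9, 1.0):
    print(f"hit rate {rate:.0%}: ${input_cost_usd(100_000, rate):.3f} of input per step")
```

Because input dominates at a 100:1 ratio, the hit rate, not the output length, drives most of the per-step cost.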

Improving KV-Cache Hit Rate

Key Practices:

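- Keep your prompt prefix stable: because LLMs decode autoregressively, even a single-token difference invalidates the cache from that token onward

- Make your context append-only: avoid modifying previous actions or observations, and keep serialization deterministic (for example, stable JSON key ordering)

- Mark cache breakpoints explicitly when your provider or framework requires them: account for potential cache expiration, and at minimum make sure a breakpoint covers the end of the system prompt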

Common mistake: placing a timestamp (especially one precise to the second) at the beginning of the system prompt; it changes on every request and kills your cache hit rate
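A minimal sketch of why a leading timestamp hurts, assuming a cache that reuses the longest shared token prefix between consecutive requests and using whitespace splitting as a stand-in for a real tokenizer. `build_prompt`, `cached_prefix_len`, and the tool names are illustrative, not a real provider API; `sort_keys=True` keeps JSON serialization deterministic so the same event always yields the same tokens.

```python
import json
from datetime import datetime, timedelta

STABLE_SYSTEM = "You are a helpful agent."


def tokenize(text: str) -> list[str]:
    # Stand-in for a real tokenizer; whitespace splitting is enough to show prefix effects.
    return text.split()


def cached_prefix_len(previous: list[str], current: list[str]) -> int:
    """Number of tokens reusable from the previous request (their shared prefix)."""
    n = 0
    for a, b in zip(previous, current):
        if a != b:
            break
        n += 1
    return n


def build_prompt(system: str, history: list[dict]) -> str:
    # sort_keys=True: identical events always serialize to identical text.
    events = " ".join(json.dumps(event, sort_keys=True) for event in history)
    return f"{system} {events}"


def timestamped_system(now: datetime) -> str:
    # Anti-pattern: a per-second timestamp at the very start of the prompt.
    return f"[{now.isoformat()}] {STABLE_SYSTEM}"


t0 = datetime(2025, 1, 1, 12, 0, 0)
prev_history = [{"tool": "browser_open", "url": "https://example.com"}]
next_history = prev_history + [{"tool": "shell_exec", "cmd": "ls"}]  # append-only

bad_prev = tokenize(build_prompt(timestamped_system(t0), prev_history))
bad_next = tokenize(build_prompt(timestamped_system(t0 + timedelta(seconds=1)), next_history))
good_prev = tokenize(build_prompt(STABLE_SYSTEM, prev_history))
good_next = tokenize(build_prompt(STABLE_SYSTEM, next_history))

print("timestamp first:", cached_prefix_len(bad_prev, bad_next), "of", len(bad_next), "tokens cached")
print("stable prefix:  ", cached_prefix_len(good_prev, good_next), "of", len(good_next), "tokens cached")
```

With the timestamp first, nothing is reusable; with a stable prefix and append-only history, the entire previous request is reused and only the newly appended event is uncached.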

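If you self-host models with frameworks like vLLM, make sure prefix/prompt caching is enabled and that requests from the same session are routed consistently across distributed workers (e.g., via session IDs)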

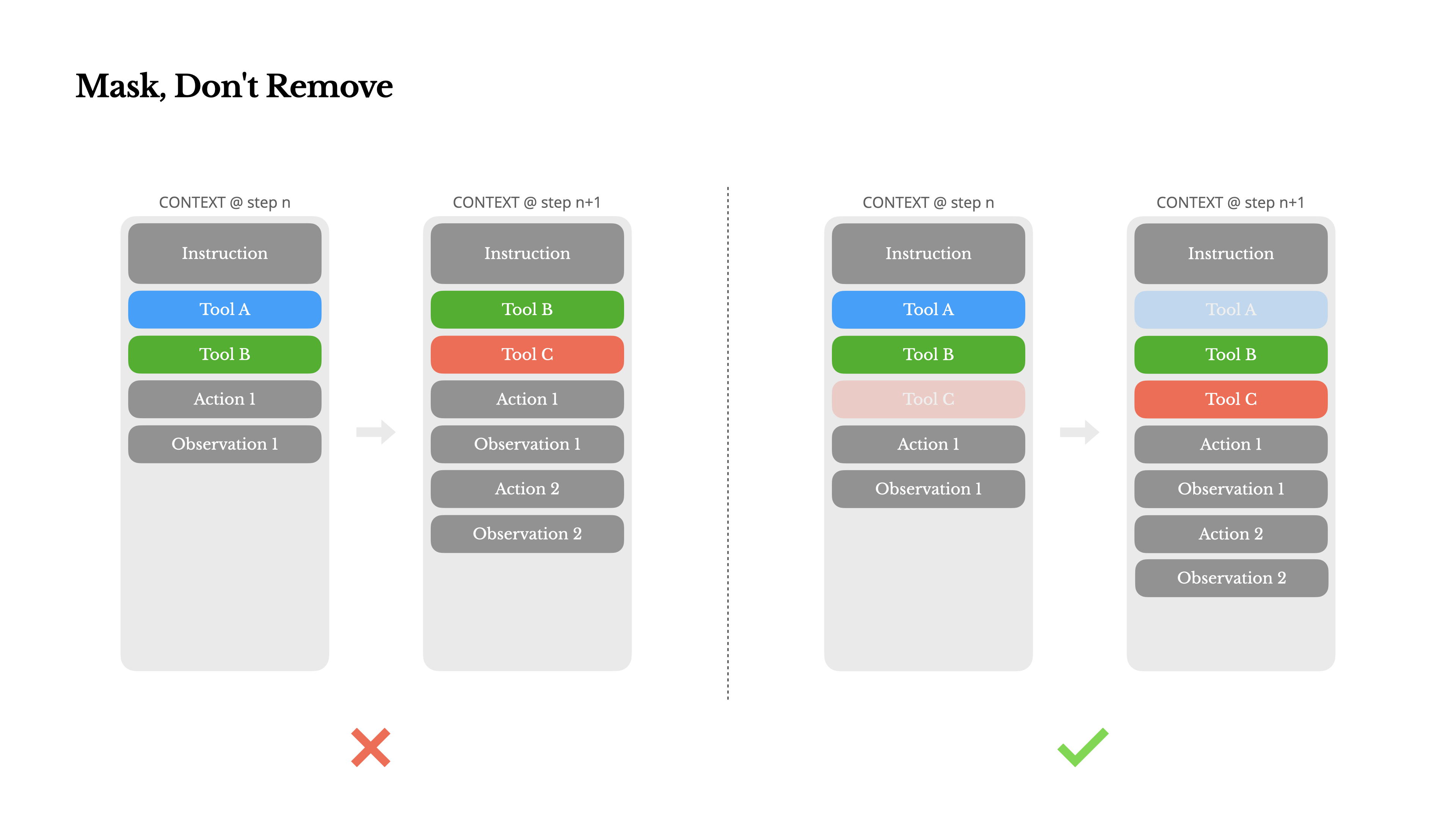

Mask, Don't Remove

As agents gain more capabilities, their action space grows more complex

Dynamic action spaces (like RAG-based tool loading) cause problems:

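- Tool definitions sit near the front of the context, so any change invalidates the KV-cache for all subsequent actions and observations

- The model gets confused when earlier actions and observations refer to tools that are no longer defined, which often leads to schema violations or hallucinated actions

Instead of removing tools, Manus uses a context-aware state machine to manage tool availability, masking token logits during decoding to prevent (or enforce) the selection of certain actions based on the current context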
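A toy sketch of state-dependent masking. It assumes each tool name decodes as a single token and uses a hypothetical three-state machine (`AgentState`, `ALLOWED`); a real decoder masks subword tokens inside a constrained function-call grammar. The tool list itself never changes, only which entries can be selected.

```python
import math
from enum import Enum, auto

# Every tool stays defined in the context; only its selectability changes.
TOOLS = ["browser_open", "browser_click", "shell_exec", "shell_view", "message_user"]


class AgentState(Enum):
    AWAITING_USER_REPLY = auto()  # user just sent input: reply instead of acting
    BROWSING = auto()
    FREE = auto()


# Hypothetical policy: which tools each state allows.
ALLOWED = {
    AgentState.AWAITING_USER_REPLY: {"message_user"},
    AgentState.BROWSING: {"browser_open", "browser_click"},
    AgentState.FREE: set(TOOLS),
}


def mask_logits(logits: dict[str, float], state: AgentState) -> dict[str, float]:
    """Set logits of tools not allowed in `state` to -inf, leaving the rest untouched."""
    allowed = ALLOWED[state]
    return {name: (score if name in allowed else -math.inf) for name, score in logits.items()}


def pick_tool(logits: dict[str, float], state: AgentState) -> str:
    """Greedy choice over the masked logits."""
    masked = mask_logits(logits, state)
    return max(masked, key=masked.get)


# Suppose the model's raw scores favour a shell command.
raw = {"browser_open": 1.2, "browser_click": 0.4, "shell_exec": 2.5, "shell_view": 0.1, "message_user": -0.3}
for state in AgentState:
    print(state.name, "->", pick_tool(raw, state))
```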

Function Calling Modes

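In practice, most model providers and inference frameworks support response prefill, which constrains action selection without modifying the tool definitions. Using the NousResearch Hermes format as an example, there are three modes: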

Auto

Model may choose to call a function or not

Prefixed with: <|im_start|>assistant

Required

Model must call a function, but may choose any function

Prefixed with: <|im_start|>assistant<tool_call>

Specified

Model must call a function from a specific subset

Prefixed with: <|im_start|>assistant<tool_call>{"name": "browser_

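Consistent tool-name prefixes (browser_ for browser tools, shell_ for command-line tools) make it easy to restrict the agent to one tool group in a given state, without stateful logits processors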
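A sketch of building the three prefills listed above. `response_prefill` and `tools_in_group` are hypothetical helpers; how prefill text is passed, and the exact whitespace after `<|im_start|>assistant`, depend on the provider or chat template.

```python
from typing import Optional

# Hermes-style prefixes as listed above.
ASSISTANT = "<|im_start|>assistant"
TOOL_CALL = "<tool_call>"


def response_prefill(mode: str, name_prefix: Optional[str] = None) -> str:
    """Text to prefill the assistant turn with for a given function-calling mode."""
    if mode == "auto":        # model may answer in text or call a function
        return ASSISTANT
    if mode == "required":    # model must call some function
        return ASSISTANT + TOOL_CALL
    if mode == "specified":   # model must call a function whose name starts with name_prefix
        if not name_prefix:
            raise ValueError("specified mode needs a tool-name prefix such as 'browser_'")
        return ASSISTANT + TOOL_CALL + '{"name": "' + name_prefix
    raise ValueError(f"unknown mode: {mode}")


def tools_in_group(tools: list[str], name_prefix: str) -> list[str]:
    # Shared prefixes make "which tools can follow this prefill" a simple prefix match.
    return [t for t in tools if t.startswith(name_prefix)]


TOOLS = ["browser_open", "browser_click", "shell_exec", "shell_view"]
print(response_prefill("specified", "shell_"))
print(tools_in_group(TOOLS, "shell_"))
```

Since the prefill ends partway through the `name` string, decoding can only complete names in that group, while every tool definition stays untouched in the context.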

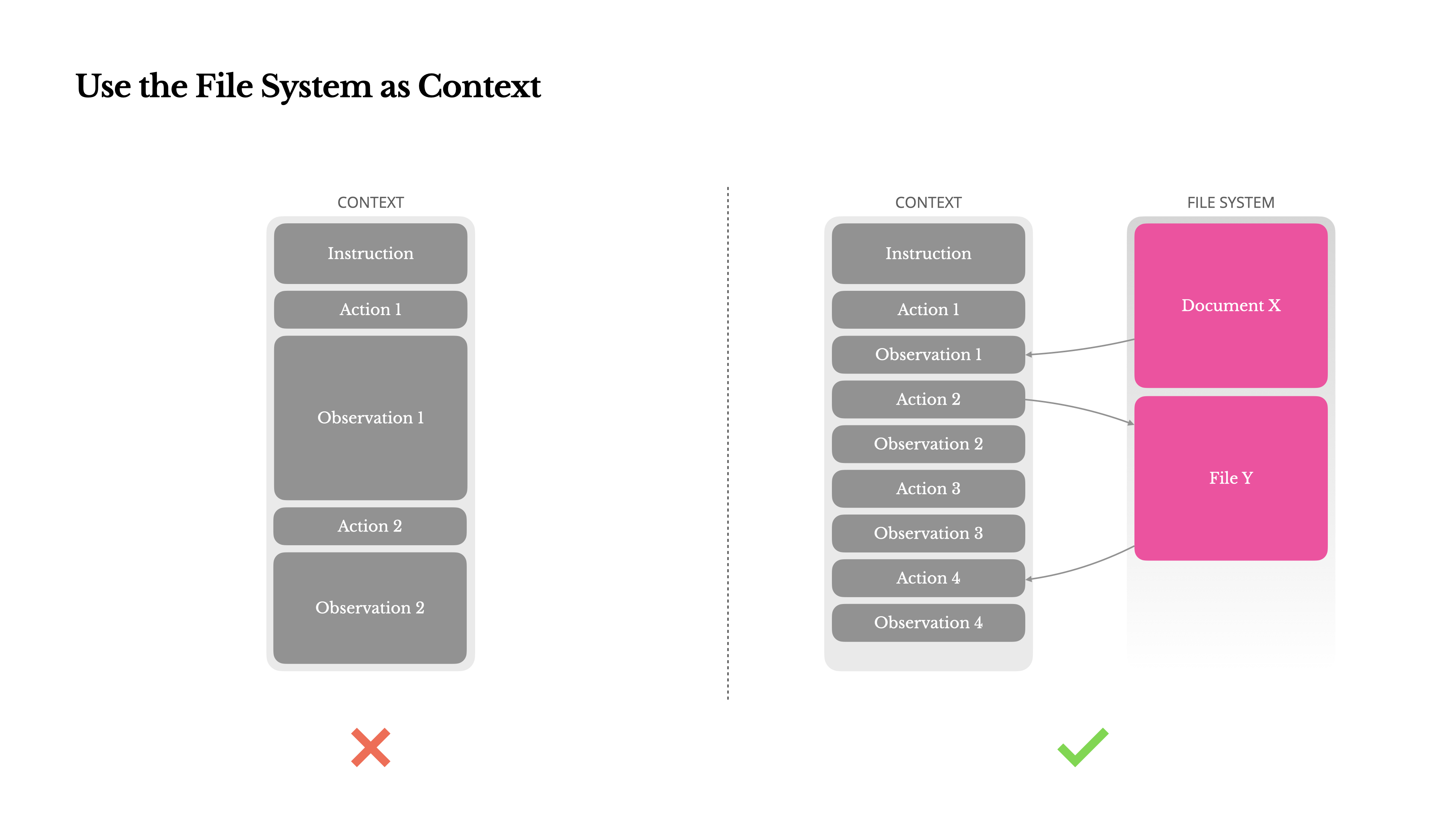

Use the File System as Context

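Modern LLMs offer context windows of 128K tokens or more, but in real-world agentic scenarios that is often not enough:

- Observations can be huge (web pages, PDFs)

- Model performance tends to degrade beyond a certain context length, even when the window technically supports it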

- Long inputs are expensive, even with prefix caching

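Manus treats the file system as the ultimate context: unlimited in size, persistent by nature, and directly operable by the agent itself

Compression is always designed to be restorable: a web page's content can be dropped from the context as long as its URL is kept, and a document's contents can be omitted as long as its path remains available in the sandbox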
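A minimal sketch of restorable compression, assuming observations carry their source URL or path. As an extra assumption for the example, the dropped content is also written to a sandbox file so restoring it is a file read rather than a re-fetch. `Observation`, `compress`, `restore`, and `render` are illustrative names.

```python
import hashlib
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class Observation:
    source: str                     # URL or file path; always kept in context
    content: Optional[str] = None   # full text; None once compressed out
    saved_to: Optional[str] = None  # sandbox file holding the dropped content


def compress(obs: Observation, workdir: Path) -> Observation:
    """Drop bulky content from the context, keeping pointers that can restore it."""
    if obs.content is None:
        return obs
    digest = hashlib.sha256(obs.source.encode("utf-8")).hexdigest()[:16]
    path = workdir / f"obs_{digest}.txt"
    path.write_text(obs.content, encoding="utf-8")
    return Observation(source=obs.source, content=None, saved_to=str(path))


def restore(obs: Observation) -> Observation:
    """Bring dropped content back by reading the saved file."""
    if obs.content is not None or obs.saved_to is None:
        return obs
    text = Path(obs.saved_to).read_text(encoding="utf-8")
    return Observation(source=obs.source, content=text, saved_to=obs.saved_to)


def render(obs: Observation) -> str:
    """What the model actually sees for this observation."""
    if obs.content is not None:
        return f"[{obs.source}]\n{obs.content}"
    return f"[{obs.source}] content omitted, saved at {obs.saved_to}"


page = Observation(source="https://example.com/report", content="lorem ipsum " * 2000)
with tempfile.TemporaryDirectory() as tmp:
    small = compress(page, Path(tmp))
    context_line = render(small)
    roundtrip_ok = restore(small).content == page.content
print(context_line)
print("restorable:", roundtrip_ok)
```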

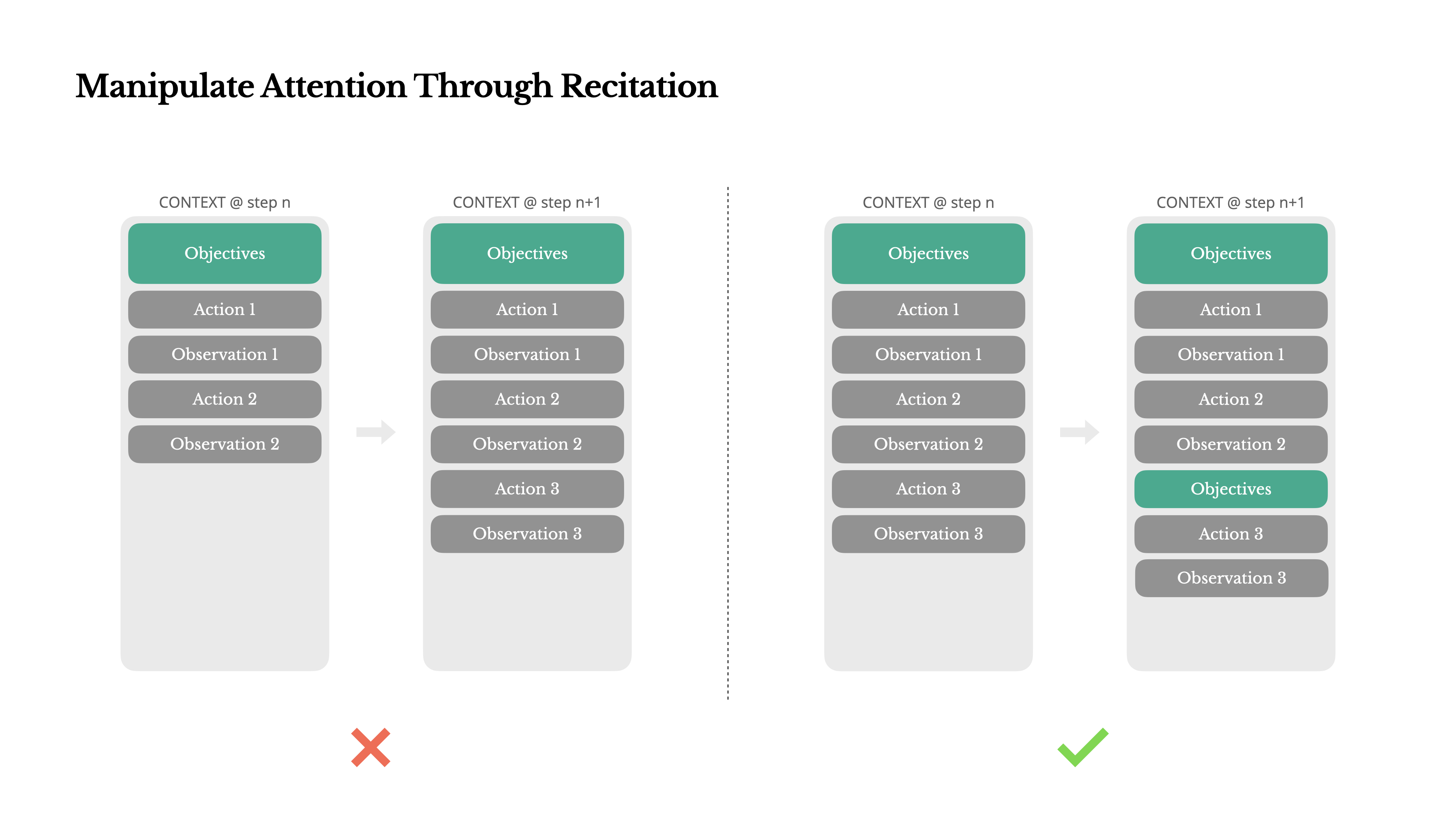

Manipulate Attention Through Recitation

During complex tasks, Manus creates a todo.md file and updates it step by step, checking off completed items

Why this matters:

- A typical Manus task requires around 50 tool calls, which makes for a long loop

- Models can drift off-topic or forget earlier goals

By constantly rewriting the todo list, Manus recites its objectives into the end of the context, pushing the global plan into the model's recent attention span

This avoids "lost-in-the-middle" issues and reduces goal misalignment
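A simplified sketch of recitation. In Manus the agent edits todo.md through its own tool calls; here, as an assumption, the harness re-appends the rendered list after each observation. `TodoList` and `step` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class TodoList:
    items: list[tuple[str, bool]] = field(default_factory=list)  # (task, done)

    def add(self, task: str) -> None:
        self.items.append((task, False))

    def check(self, task: str) -> None:
        self.items = [(t, done or t == task) for t, done in self.items]

    def render(self) -> str:
        lines = ["# todo.md"]
        lines += [f"- [{'x' if done else ' '}] {t}" for t, done in self.items]
        return "\n".join(lines)


def step(context: list[str], todo: TodoList, observation: str) -> None:
    # Append the latest observation, then re-append the current plan, so the
    # global objectives always sit at the very end of the context.
    context.append(observation)
    context.append(todo.render())


todo = TodoList()
for task in ("Collect sources", "Summarize findings", "Write report"):
    todo.add(task)

context = ["<system prompt>", "<user task>"]
step(context, todo, "observation: found 3 sources")
todo.check("Collect sources")
step(context, todo, "observation: summary drafted")
print(context[-1])
```

Re-appending a fresh copy instead of editing the earlier one keeps the context append-only, so recitation does not cost KV-cache hits.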

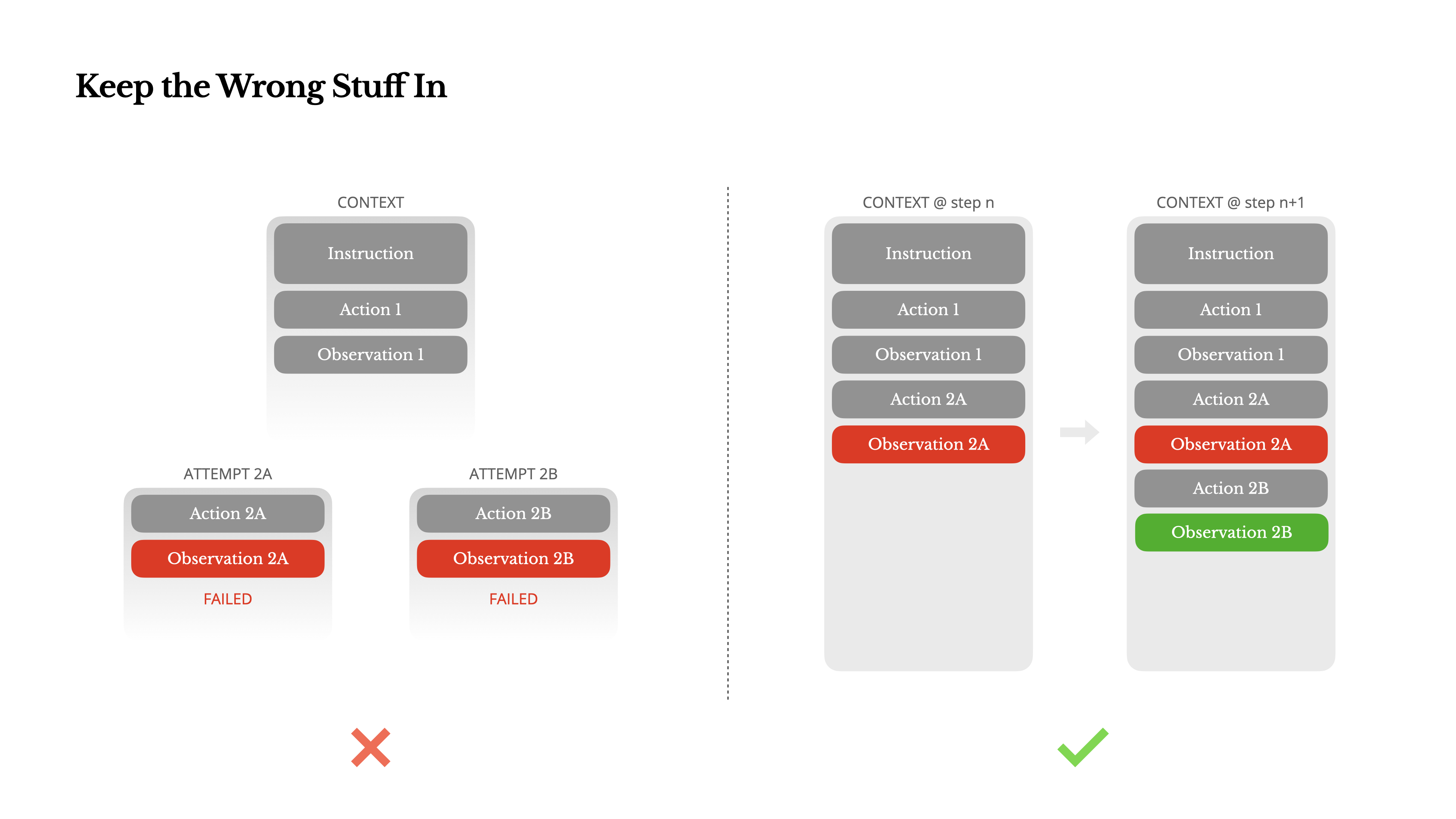

Keep the Wrong Stuff In

Agents make mistakes - that's reality, not a bug

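Common impulse: hide errors by cleaning up the trace, retrying the action, or resetting the model's state

Problem: erasing failure removes evidence, and without evidence the model can't adapt

One of the most effective improvements: leave the wrong turns in the context

When the model sees a failed action and the resulting observation (such as an error message or stack trace), it implicitly updates its internal beliefs, shifting away from similar actions and making the same mistake less likely to recur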
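A sketch of recording failures instead of erasing them. `run_tool` and the `parse_port` tool are hypothetical; the point is that a raised exception becomes a tool observation in the context, right next to the action that caused it.

```python
import traceback
from typing import Any, Callable


def run_tool(context: list[dict], name: str, fn: Callable[..., Any], *args: Any) -> None:
    """Run a tool and append both the action and its outcome, failures included."""
    context.append({"role": "assistant", "action": name, "args": list(args)})
    try:
        result = fn(*args)
    except Exception as exc:
        # Keep the failed action and its error message in context instead of
        # retrying silently, so the model can see what went wrong.
        error = "".join(traceback.format_exception_only(type(exc), exc)).strip()
        context.append({"role": "tool", "name": name, "ok": False, "output": error})
    else:
        context.append({"role": "tool", "name": name, "ok": True, "output": str(result)})


def parse_port(value: str) -> int:
    """Hypothetical tool that fails on malformed input."""
    return int(value)


context: list[dict] = []
run_tool(context, "parse_port", parse_port, "80a")   # fails; the error stays in context
run_tool(context, "parse_port", parse_port, "8080")  # the next attempt can see why the first failed
for event in context:
    print(event)
```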

Error recovery is one of the clearest indicators of true agentic behavior

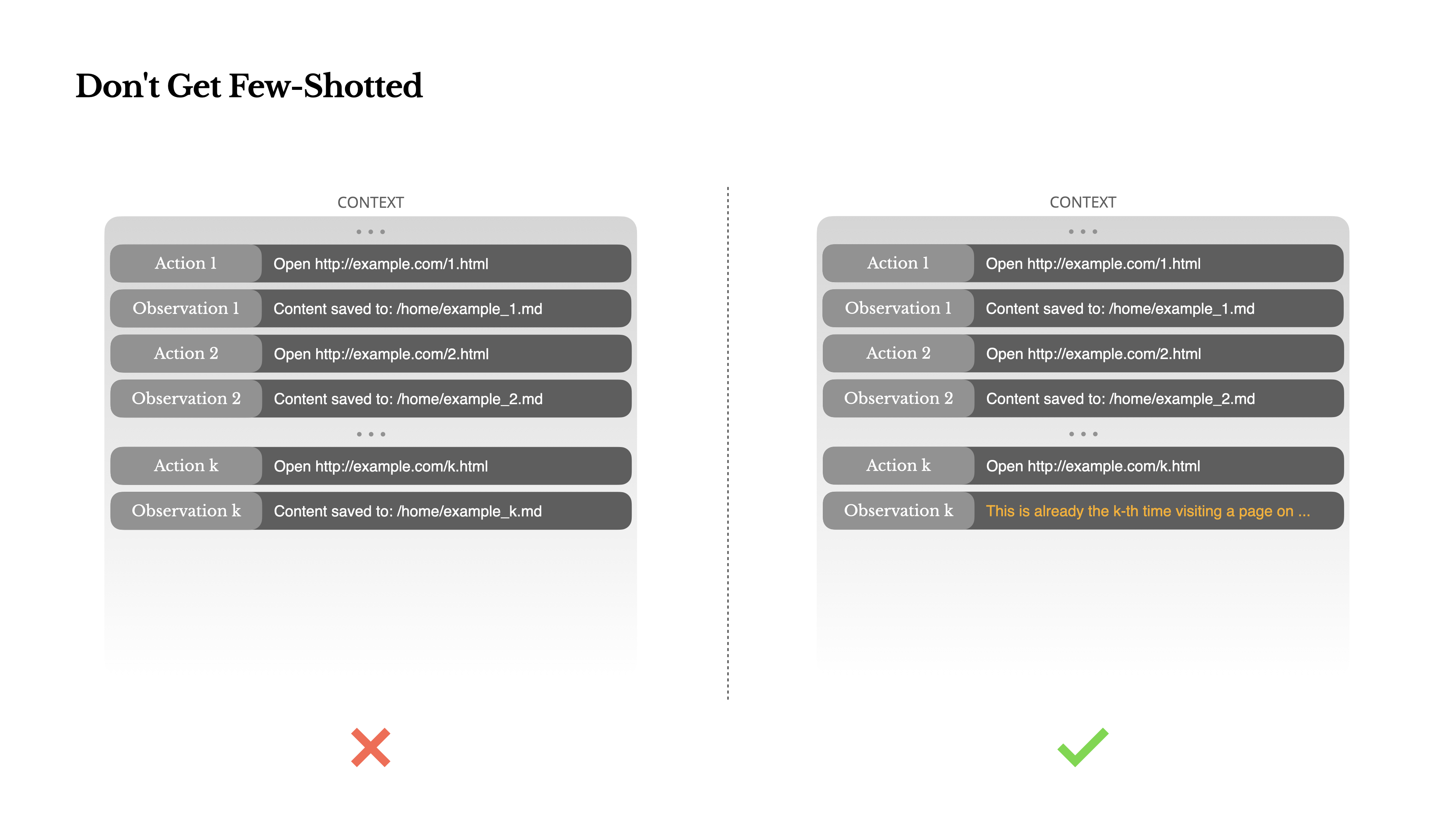

Don't Get Few-Shotted

Few-shot prompting can backfire in agent systems

Language models are excellent mimics - they imitate patterns in context

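This is dangerous in tasks that involve repetitive decisions or actions:

- The agent falls into a rhythm, repeating similar actions simply because the context shows them

- This leads to drift, overgeneralization, or hallucination

Fix: introduce small amounts of structured variation in actions and observations, such as different serialization templates, alternate phrasing, or minor noise in order or formatting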

The more uniform your context, the more brittle your agent becomes
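A sketch of structured variation, assuming three hypothetical, semantically equivalent templates for the same observation. A seeded `random.Random` keeps a run reproducible, and the file names in the batch are made-up sample data.

```python
import json
import random
from typing import Callable

# Hypothetical, semantically equivalent renderings of the same observation.
TEMPLATES: list[Callable[[dict], str]] = [
    lambda obs: json.dumps(obs, sort_keys=True),
    lambda obs: "; ".join(f"{k}={v}" for k, v in sorted(obs.items())),
    lambda obs: "\n".join(f"{k}: {v}" for k, v in sorted(obs.items())),
]


def serialize(observation: dict, rng: random.Random) -> str:
    """Render one observation with a randomly chosen template (chosen once, at append time)."""
    template = rng.choice(TEMPLATES)
    return template(observation)


rng = random.Random(0)  # seeded, so a given run is reproducible
batch = [{"file": f"resume_{i:02d}.pdf", "status": "reviewed"} for i in range(6)]
context = [serialize(obs, rng) for obs in batch]
for entry in context:
    print(entry)
    print("---")
```

Each observation is rendered once when appended and never re-rendered, so this variation does not conflict with an append-only, cache-friendly context.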

Conclusion

Context engineering is still an emerging science—but for agent systems, it's already essential

How you shape the context ultimately defines how your agent behaves:

- How fast it runs

- How well it recovers

- How far it scales

"The agentic future will be built one context at a time. Engineer them well."

These patterns worked for us after repeated rewrites, dead ends, and real-world testing across millions of users